Humans have a wonderful ability to play out scenarios in our heads before they happen. We can imagine the path our arm would take as it sweeps across a desk to pick up a cup of coffee, or picture our fingers plucking a tricky guitar riff. Professional athletes use ‘mental practice’ to envision themselves executing the sequence of steps needed to perform an advanced maneuver.

These are all illustrations of how our mind creates a model of the world we interact with. In machine learning, this is referred to as an agent’s world model. This model shows us how events might unfold before those events have happened, and its predictions are based on what we’ve encountered before. It’s the reason why bouncing on a trampoline is sensible but bouncing on a sidewalk seems silly – it doesn’t conform to our world model. However, virtual characters do not typically have this world model, which could explain why animating them in a virtual, physically simulated environment has historically been so difficult.

Inspired by this idea, we present a world model-based method for learning physical, interactive character controllers. We found that world models are not only faster than previous approaches for training character controllers, but can also easily scale to a large number of motions in a single controller.

A Ragdoll’s World

A ragdoll is a coarse representation of a character constructed from simple physical objects such as spheres and capsules, connected using motorized joints. Ragdolls help bridge the gap between a game character and the physical world that character occupies.

For example, when a character falls over or is hit by flying debris, a ragdoll can be used to determine how that character might respond to the interaction. In the game Steep, ragdolls were used to help produce realistic motions when the character fell, as shown in the clip on the left. They can also make subtle motions of game characters feel more immersive, as shown in the clip on the right where the character riding on the sled is jostled by forces from the terrain and the acceleration of the sled.

In the last few years, machine learning researchers have successfully applied neural networks to the task of modeling complex dynamical systems. We were interested in whether these ragdoll motions could be modeled by a neural network, and what such a network could be used for.

By collecting hundreds of thousands of different transitions of these systems, a neural network can be trained to faithfully recreate the movement of fluids, the flow of clothing on a character, or the motion of rigid objects. A human ragdoll might have fewer moving parts than a fluid simulation, but the constraints and contacts in its motions can make it difficult to model.

Each of the major body parts of a character is represented by what is called a rigid body. These rigid bodies are affected by forces due to gravity and contacts with the ground, so we need some way for them to stay together. A joint does this by constraining the relative motion between any two rigid bodies.

For the human ragdoll, a joint is analogous to a simplified muscle in a real human. Just as you can move your own arm by activating your muscles, a ragdoll can move its rigid bodies by driving its joints like the motors of a robot. These motorized joints can be used to drive the ragdoll into different poses, or even to animate it:
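The post does not say how the joint motors are implemented. A common choice in physics engines, assumed here, is a proportional-derivative (PD) controller: the motor setting is a target angle, and the joint applies a torque proportional to the remaining angle error, minus a damping term on the joint's velocity. The one-axis sketch below uses a hypothetical `HingeJoint` type and made-up gains (`kp`, `kd`); real ragdoll joints are three-dimensional and are stepped by the physics engine rather than by hand.

```python
from dataclasses import dataclass


@dataclass
class HingeJoint:
    """Hypothetical single-axis motorized joint (angles in radians)."""
    angle: float = 0.0
    velocity: float = 0.0
    inertia: float = 1.0


def pd_torque(joint: HingeJoint, target_angle: float,
              kp: float = 50.0, kd: float = 5.0) -> float:
    # The proportional term pulls toward the target; the derivative term damps motion.
    return kp * (target_angle - joint.angle) - kd * joint.velocity


def step(joint: HingeJoint, torque: float, dt: float = 1.0 / 60.0) -> None:
    # Semi-implicit Euler: update the velocity first, then the angle.
    joint.velocity += torque / joint.inertia * dt
    joint.angle += joint.velocity * dt


joint = HingeJoint()
for _ in range(600):  # ten seconds at 60 frames per second
    step(joint, pd_torque(joint, target_angle=0.5))
```

Changing `target_angle` from frame to frame is what turns a pose-holding motor into an animation driver.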

How complex is the motion of a ragdoll? As you walk across a room, the orientation and position of your hands, waist, head, and every other body part change over time. Together, the positions and orientations of all these parts at a specific point in time are called your pose. The sequence of changes for any one body part traces out a curve, or trajectory.

All these trajectories for all your body parts together define your motion as you move. If we want to use machine learning to model the motion of a ragdoll, that neural network must be able to reproduce these trajectories as accurately as possible for all the different ways a ragdoll might move. That’s not an easy task.

![[La Forge] SuperTrack – Motion Tracking for Physically Simulated Characters using Supervised Learning -](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/79pkNDzPHqlr1qZM7Q4KDY/d2354a3c5d055225dc2b4425960fbb21/-La_Forge-_rollouts_white.png)
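To make these terms concrete, here is one hypothetical way to store a pose and pull out the trajectory of a single body part. The `Pose` type, the body-part names, and the (w, x, y, z) quaternion convention are illustrative assumptions, not the representation used in the paper.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)


@dataclass
class Pose:
    """Hypothetical pose: where every body part is, and how it is oriented, at one frame."""
    positions: Dict[str, Vec3]
    rotations: Dict[str, Quat]


def trajectory(motion: List[Pose], part: str) -> List[Vec3]:
    """The curve traced by one body part across the frames of a motion."""
    return [pose.positions[part] for pose in motion]


IDENTITY: Quat = (1.0, 0.0, 0.0, 0.0)

# A toy three-frame motion in which the whole body moves forward along x.
motion = [
    Pose(positions={"hand": (0.1 * t, 1.0, 0.0), "head": (0.1 * t, 1.7, 0.0)},
         rotations={"hand": IDENTITY, "head": IDENTITY})
    for t in range(3)
]
hand_curve = trajectory(motion, "hand")
```

A full motion is then one such trajectory per body part, which is what a model of ragdoll motion has to reproduce.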

It turns out that if we have an appropriate representation of a ragdoll and a large enough neural network, we can train the neural network to predict the dynamics of a ragdoll over short trajectories. Our neural network will take as input the current pose of the ragdoll at a certain frame (in yellow), as well as the motor settings for driving the character to the next frame (in blue), and will predict the next pose of the ragdoll (in red).

![[La Forge] SuperTrack – Motion Tracking for Physically Simulated Characters using Supervised Learning -](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/7KtwR5iGAA9VRSj7Boytzx/b3a415d8e7acafbf4d6ccd897dbf4784/-La_Forge-_world_model_pred1-1.png)
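In code, the world model is simply a function from (current pose, motor settings) to next pose. The sketch below flattens both inputs into lists of floats and uses a single hypothetical linear layer in place of the real neural network. Predicting a change in pose and adding it to the current pose is a common design choice that we assume here for illustration; the paper's network and state representation are more involved.

```python
import random
from typing import List


class LinearWorldModel:
    """Hypothetical stand-in for the world model network: one linear layer that
    maps [pose, motor settings] to a predicted change in pose."""

    def __init__(self, pose_dim: int, action_dim: int, seed: int = 0):
        rng = random.Random(seed)
        in_dim = pose_dim + action_dim
        # Small random weights, one row per pose dimension.
        self.weights = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)]
                        for _ in range(pose_dim)]

    def predict(self, pose: List[float], motor_settings: List[float]) -> List[float]:
        x = pose + motor_settings  # list concatenation forms the network input
        delta = [sum(w * xi for w, xi in zip(row, x)) for row in self.weights]
        return [p + d for p, d in zip(pose, delta)]  # next pose = current + change


model = LinearWorldModel(pose_dim=4, action_dim=2)
next_pose = model.predict([0.0, 1.0, 0.0, 0.5], [0.1, -0.2])
```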

The naive way to train this neural network (or world model) is to compare its prediction of the next pose of the ragdoll to the correct next pose of the ragdoll and adjust the neural network parameters so that the prediction is closer to the correct pose. In practice, for a complex system, this approach to training can lead to instabilities in the final results.
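The naive objective can be sketched on a one-dimensional toy problem. Here `true_step` is a made-up stand-in for the physics simulator and `model_step` is a two-parameter stand-in for the world model; each update compares a single predicted next state with the correct one and takes a gradient step on the squared error. A system this simple trains without trouble, so the sketch only shows the shape of the one-step objective, not the instabilities that appear on a full ragdoll.

```python
import random


def true_step(x, u):
    # Hypothetical stand-in for the physics simulator; the model never sees these numbers.
    return 0.95 * x + 0.1 * u


def model_step(params, x, u):
    # Hypothetical two-parameter stand-in for the world model.
    a, b = params
    return x + a * x + b * u


def one_step_loss_and_grad(params, x, u, x_next):
    """Squared error of a single predicted next state, and its gradient w.r.t. (a, b)."""
    err = model_step(params, x, u) - x_next
    return err * err, (2 * err * x, 2 * err * u)


rng = random.Random(0)
params = [0.0, 0.0]
learning_rate = 0.1
for _ in range(2000):
    x, u = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
    loss, (grad_a, grad_b) = one_step_loss_and_grad(params, x, u, true_step(x, u))
    params[0] -= learning_rate * grad_a
    params[1] -= learning_rate * grad_b
```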

Shown below is the result of this approach applied to predicting the trajectories of a walking ragdoll motion. The red character is the prediction of the world model over a one second horizon, and the yellow character is the ragdoll that is being modeled:

As you can see, the world model doesn’t do a great job of tracking the ragdoll motion, which illustrates the challenge of training a neural network to track a ragdoll’s motion. One problem is that as the world model makes predictions further into the future, the errors in each prediction compound and cause the predicted trajectories to deviate from the target trajectories.
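Compounding error is easy to see with numbers. In this toy example, where both dynamics functions are invented for illustration, the learned model's growth factor is off by only 0.002 per step, so its one-step error is tiny. When its own predictions are fed back in for 60 steps (one second at an assumed 60 frames per second), the gap to the true trajectory becomes more than a hundred times larger.

```python
def rollout(step, x0, n):
    # Feed each output back in as the next input, n times.
    xs = [x0]
    for _ in range(n):
        xs.append(step(xs[-1]))
    return xs


def true_step(x):
    # Hypothetical stand-in for the simulator.
    return 1.01 * x + 0.01


def model_step(x):
    # Hypothetical learned model with a small per-step error.
    return 1.012 * x + 0.01


truth = rollout(true_step, 1.0, 60)
pred = rollout(model_step, 1.0, 60)
one_step_error = abs(model_step(truth[0]) - truth[1])  # about 0.002
open_loop_error = abs(pred[60] - truth[60])            # about 0.28
```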

But this can be corrected by giving the neural network information about its previous predictions so that it can learn to adjust for errors in its future predictions. To do this, we train the neural network over a short window of frames where the future predictions of the world model are fed back as inputs during training, like so:

![[La Forge] SuperTrack – Motion Tracking for Physically Simulated Characters using Supervised Learning - wm_rollouts_v3](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/5InpoY9lsftdKOvi4GvTyH/ec5558d681c2c1bd82aa74ca13a7f47e/-La_Forge-_wm_rollouts_v3.png)
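The same kind of toy setup can be trained over a window instead. In the sketch below, `rollout_loss` unrolls the model for eight steps, feeding each prediction back in as the next input, and averages the squared error against the true trajectory, so mistakes made early in the window are penalized for their effect on later frames. For brevity it estimates gradients with central finite differences rather than backpropagation; that substitution is ours, not the paper's. The window length, learning rate, and both dynamics functions are illustrative assumptions.

```python
import random


def true_step(x, u):
    # Hypothetical stand-in for the physics simulator.
    return 0.95 * x + 0.1 * u


def model_step(params, x, u):
    # Hypothetical two-parameter stand-in for the world model.
    a, b = params
    return x + a * x + b * u


def rollout_loss(params, x0, actions, targets):
    """Unroll the model over a window, feeding each prediction back in as input."""
    x, loss = x0, 0.0
    for u, target in zip(actions, targets):
        x = model_step(params, x, u)  # the prediction becomes the next input
        loss += (x - target) ** 2
    return loss / len(targets)


def make_window(rng, length=8):
    # Record a short window of true transitions, as a replay buffer would hold.
    x0 = rng.uniform(-1.0, 1.0)
    actions = [rng.uniform(-1.0, 1.0) for _ in range(length)]
    targets, x = [], x0
    for u in actions:
        x = true_step(x, u)
        targets.append(x)
    return x0, actions, targets


def numeric_grad(f, params, eps=1e-6):
    # Central finite differences, used here in place of backpropagation.
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((f(up) - f(down)) / (2 * eps))
    return grads


rng = random.Random(1)
params = [0.0, 0.0]
for _ in range(2000):
    window = make_window(rng)
    grad = numeric_grad(lambda p: rollout_loss(p, *window), params)
    params = [p - 0.01 * g for p, g in zip(params, grad)]
```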

If we train the world model over a short window of frames, the results are now stable, and the world model can predict the motion of the ragdoll accurately over the one-second horizon.

Although the prediction quality starts to drift after one second, this is long enough to use this world model to build a motion tracking character controller.

Bringing a Ragdoll to Life

For our character controller, we want to have the ragdoll be able to perform a variety of difficult motions such as walking, jumping, or even tango dancing. To do this we need two things: an objective that tells the ragdoll how to achieve these motions, and a controller that enables the ragdoll to achieve the objective.

For the objective, we are going to use a target animation and compute how close each frame of the ragdoll motion is to each frame of the target animation. For instance, if we wanted a ragdoll to run, we would have an objective that computes the difference between the ragdoll pose and the target animation pose for each frame in a running animation:
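A minimal sketch of such an objective, assuming each frame is a dictionary of body-part positions and that the per-frame difference is the sum of Euclidean distances between matching parts. A practical objective would likely also compare rotations and velocities; those terms are left out here for brevity.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Frame = Dict[str, Vec3]  # hypothetical: body-part name -> world position


def pose_difference(ragdoll: Frame, target: Frame) -> float:
    """Sum of distances between matching body parts in one frame."""
    return sum(math.dist(ragdoll[part], target[part]) for part in target)


def tracking_objective(ragdoll_frames: List[Frame], target_frames: List[Frame]) -> float:
    """Average per-frame pose difference over a clip (lower is better)."""
    total = sum(pose_difference(r, t) for r, t in zip(ragdoll_frames, target_frames))
    return total / len(target_frames)


target = [{"hand": (0.0, 1.0, 0.0)}, {"hand": (0.1, 1.0, 0.0)}]
ragdoll = [{"hand": (0.0, 1.0, 0.0)}, {"hand": (0.0, 1.0, 0.0)}]
score = tracking_objective(ragdoll, target)  # the hand lags by 0.1 in the second frame
```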

The controller is then tasked with determining the ragdoll’s motor settings at every frame so that it achieves the objective. In general, the controller can be thought of as a function that outputs the motor settings given the current pose of the ragdoll and the target pose from the animation that we want the ragdoll to be in. This type of controller is called a policy and is represented by a second neural network:

![[La Forge] SuperTrack – Motion Tracking for Physically Simulated Characters using Supervised Learning - policy_image](http://staticctf.ubisoft.com/J3yJr34U2pZ2Ieem48Dwy9uqj5PNUQTn/68xVh1CAOMk58S2Hd7X1Ny/e18bf6143155d44308ee123441d743a3/-La_Forge-_policy_image.png)
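The policy's interface can be sketched as a callable with that signature. Here the motor settings are assumed to be PD target angles, one per joint, and a single hypothetical `gain` parameter stands in for the network's weights: the policy aims past the animation target in proportion to the tracking error, so the motors push harder when the ragdoll falls behind. The real policy is a neural network whose outputs are learned rather than hand-designed.

```python
from typing import List


class LinearPolicy:
    """Hypothetical stand-in for the policy network."""

    def __init__(self, gain: float = 0.5):
        self.gain = gain  # the only learnable parameter in this toy

    def __call__(self, current: List[float], target: List[float]) -> List[float]:
        # Motor settings: one PD target angle per joint, overshooting the
        # animation target in proportion to the current tracking error.
        return [t + self.gain * (t - c) for c, t in zip(current, target)]


policy = LinearPolicy(gain=0.5)
motor_targets = policy(current=[0.0, 0.2], target=[0.1, 0.2])
```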

We can now train the policy to improve itself on the objective of animation tracking. The ideal way to train the policy for this objective would be to have the policy drive the ragdoll for a certain number of steps in the simulation, compute the objective at each step, and then use backpropagation to improve the policy. This would be repeated thousands of times to gradually improve the policy’s performance. But there is a problem with this approach that means we can’t use it in practice.

To use backpropagation, every computation for the policy and the simulation must be differentiable. In this context, that means we can directly compute an adjustment to the weights of the policy neural network that should improve its performance on the objective. However, almost all physics simulators used in games are not differentiable because of how they handle things like collision resolution and friction. Recall, though, that our world model from earlier is a good approximation to the motion of our ragdoll in the physics simulation.

Further, because it is a neural network, it is also differentiable. Therefore, we can replace the physical simulation of the ragdoll with a world model and make the entire system differentiable:
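The key property is that gradients can flow from the tracking objective, through the world model, back to the policy's parameters. The toy below shows this with a single policy parameter (`gain`), a made-up linear world model, and a target that holds the state at 1.0. To stay dependency-free it uses forward-mode automatic differentiation with dual numbers instead of backpropagation (reverse mode); for one parameter both give the same gradient, while a real policy with many weights would use backpropagation in an autodiff framework.

```python
from dataclasses import dataclass


@dataclass
class Dual:
    """A value paired with its derivative with respect to one chosen parameter."""
    val: float
    der: float = 0.0

    def __add__(self, other):
        other = lift(other)
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __sub__(self, other):
        other = lift(other)
        return Dual(self.val - other.val, self.der - other.der)

    def __rsub__(self, other):
        return lift(other) - self

    def __mul__(self, other):
        other = lift(other)  # product rule for the derivative part
        return Dual(self.val * other.val, self.der * other.val + self.val * other.der)

    __rmul__ = __mul__


def lift(x):
    return x if isinstance(x, Dual) else Dual(float(x))


def world_model(x, u):
    # Hypothetical trained world model: smooth in both inputs, hence differentiable.
    return 0.95 * x + 0.1 * u


def policy(gain, x, target):
    # Hypothetical one-parameter policy.
    return gain * (target - x)


def rollout_loss(gain, steps=20, target=1.0):
    x, loss = 0.0, 0.0
    for _ in range(steps):
        x = world_model(x, policy(gain, x, target))  # world model replaces the simulator
        loss = loss + (x - target) * (x - target)
    return loss


gain = 1.0
for _ in range(200):
    grad = rollout_loss(Dual(gain, 1.0)).der  # seed d(gain)/d(gain) = 1
    gain -= 0.5 * grad
```

Because `rollout_loss` also accepts a plain float, the same function serves for evaluation and for differentiation.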

There are some other implementation details like avoiding vanishing gradients and determining the number of simulation steps the ragdoll should take, but overall, the pipeline is well suited for the objective of having the ragdoll track a target animation. If you are interested in the details, take a look at the accompanying paper linked at the end of this post.

As an example, let’s use this approach to make the ragdoll run. The objective will be for the ragdoll motion to closely match a motion capture animation of a person running. By training a world model on the ragdoll’s motion and then training a policy through the world model, the ragdoll is able to track the running animation with stable, high-quality motion:

The advantage of training a policy in this way is that it is far more sample efficient than approaches that don’t use a world model, and the final policy produces higher-quality tracking results due to a more direct learning signal provided by the world model.

Breakdancing, Karate, and Running Around

We can scale this approach to work on more than just a single running animation. For this experiment we used the LaFan dataset which contains over four-and-a-half hours of motion capture for video game-related motions such as running, fighting, rolling, and wielding items. To avoid having to first train a world model and then use the world model to train a policy for these motions, we can instead train both the world model and the policy at the same time to reduce training overhead.

First, a large batch of random animation trajectories is sampled from the animation dataset. Next, the policy is used to control the ragdoll to try to track the batch of animation trajectories. The transition information of the ragdoll motions and corresponding animations are stored in a large replay buffer. This replay buffer of ragdoll and animation transitions is then used to train the world model by sampling a batch of random trajectories from it.

Finally, the world model and the sampled animations are used to train the policy. These steps are then repeated with a new batch of randomly sampled animation trajectories. Neither the policy nor the world model requires training on samples from the most recent policy, so data sampling and training can be done in parallel, as demonstrated in the graphic below. This is unlike more sample-inefficient reinforcement learning methods, which have a policy and a value function that must be kept in sync with each other.
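The loop described above can be outlined as follows. Everything here is a hypothetical skeleton: `collect`, `update_world_model`, and `update_policy` are placeholders for the simulator rollout and the two training steps, and the batch sizes are arbitrary. The replay buffer keeps each recorded clip separate so that sampled windows never straddle two clips. The steps run one after another for clarity; because neither update needs data from the latest policy, a real system could run collection and training concurrently.

```python
import random
from collections import deque


class ReplayBuffer:
    """Stores recorded trajectories; the oldest are dropped when full."""

    def __init__(self, capacity: int, seed: int = 0):
        self.trajectories = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, trajectory):
        self.trajectories.append(trajectory)

    def sample_windows(self, batch_size: int, window: int):
        """Sample contiguous windows, each taken from a single trajectory."""
        long_enough = [t for t in self.trajectories if len(t) >= window]
        windows = []
        for _ in range(batch_size):
            traj = self.rng.choice(long_enough)
            start = self.rng.randrange(len(traj) - window + 1)
            windows.append(traj[start:start + window])
        return windows


def train(clips, collect, update_world_model, update_policy,
          iterations, buffer, clips_per_iter=2, batch_size=4, window=8):
    rng = random.Random(0)
    for _ in range(iterations):
        # Sample animation clips and let the current policy track them.
        for clip in rng.sample(clips, k=clips_per_iter):
            buffer.add(collect(clip))
        # Train the world model on windows sampled from the replay buffer.
        update_world_model(buffer.sample_windows(batch_size, window))
        # Train the policy through the world model on freshly sampled windows.
        update_policy(buffer.sample_windows(batch_size, window))


# Placeholder usage: count how often each update runs.
calls = {"world_model": 0, "policy": 0}


def collect(clip):
    # Placeholder: pair each animation frame with a fake ragdoll state.
    return [(frame, frame) for frame in clip]


def update_world_model(windows):
    calls["world_model"] += 1


def update_policy(windows):
    calls["policy"] += 1


clips = [list(range(i, i + 30)) for i in range(10)]
buffer = ReplayBuffer(capacity=100)
train(clips, collect, update_world_model, update_policy, iterations=5, buffer=buffer)
```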

After only forty hours of training on the LaFan dataset, the policy can track 80% of the motions in the dataset for over thirty seconds. In contrast, the current state-of-the-art reinforcement learning algorithm, Proximal Policy Optimization (PPO), produces a policy that cannot scale to such a diverse set of complex motions in such a short training time: after forty hours of training with PPO, the policy can track only 5% of the motions beyond thirty seconds. Here are some of the challenging motions that our world model-based approach learned with a single policy:

This world model-based approach can be applied to other physically-based character control tasks. For example, recent research demonstrated how to build real-time interactive character controllers with reinforcement learning. We use a similar system, but replace reinforcement learning with our world model-based solution. The world model solution was able to learn a character controller from thirty minutes of recorded data after just three hours of training.

Our approach is character-agnostic and can be applied to different animals if we have the animation data. For example, we built this dog controller by using motion capture of a real dog:

We also experimented with the more challenging task of training a ragdoll to navigate rough terrain. A large database of animations on uneven surfaces was collected and a heightmap terrain was generated from the animation data. For this task, the policy was given information about the heightmap below it so it could respond to changes in terrain height. The result is a ragdoll with some impressive parkour skills:
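The post does not describe how the heightmap is encoded for the policy. One plausible encoding, assumed here purely for illustration, is a small grid of terrain heights around the character, measured relative to the ground directly beneath it so the observation does not depend on absolute altitude. The grid size, nearest-cell lookup, and world-aligned axes are simplifications; a real controller would likely also rotate the grid to match the character's heading.

```python
from typing import List


def sample_local_heightmap(heights: List[List[float]], cell_size: float,
                           x: float, z: float, radius: int = 2) -> List[float]:
    """Terrain heights on a (2 * radius + 1)^2 grid centred on the character,
    relative to the ground directly below it (nearest cell, clamped at edges)."""
    rows, cols = len(heights), len(heights[0])

    def height_at(px: float, pz: float) -> float:
        i = min(max(round(pz / cell_size), 0), rows - 1)
        j = min(max(round(px / cell_size), 0), cols - 1)
        return heights[i][j]

    ground = height_at(x, z)
    return [height_at(x + dx * cell_size, z + dz * cell_size) - ground
            for dz in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)]


# Toy terrain: flat ground with a step of height 0.3 from column 5 onwards.
terrain = [[0.0 if j < 5 else 0.3 for j in range(10)] for _ in range(10)]
obs = sample_local_heightmap(terrain, cell_size=0.5, x=2.0, z=2.5, radius=1)
```

Here the character stands just before the step, so the right-hand column of each row in `obs` reads 0.3.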

The World According to an Animated Ragdoll

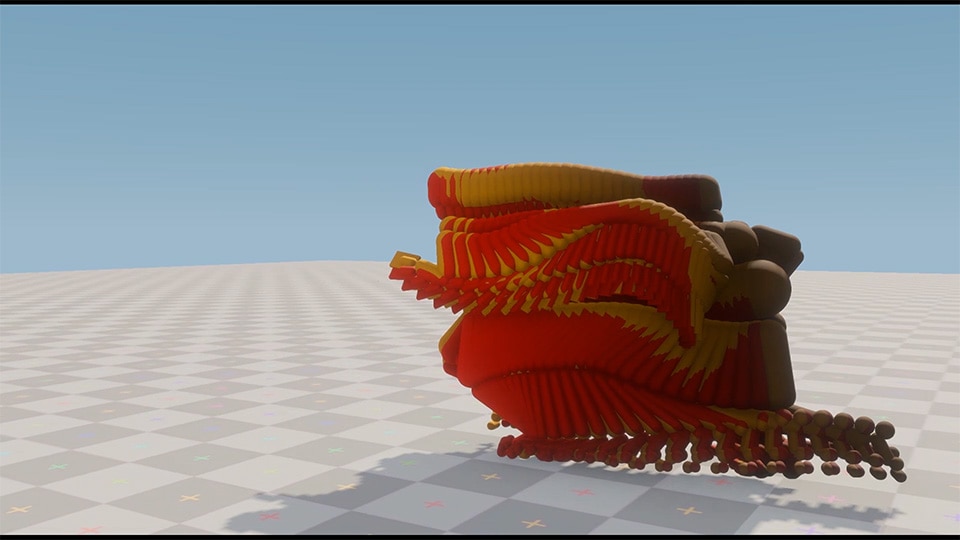

Finally, let’s visualize the predictions of the world model to see the ragdoll trajectories that the world model generates for all these challenging motions. Here we are showing the one-second trajectories of the world model before resetting it to the real ragdoll pose:
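The visualization procedure can be sketched as follows: run the world model on its own predictions, but every `horizon` frames (60 for one second, assuming 60 frames per second) snap its state back to the real ragdoll. The toy dynamics in the usage example are invented, and the small horizon is only there to make the reset visible: the error grows within each segment and drops back after each reset.

```python
def rollout_with_resets(model_step, real_states, actions, horizon):
    """Open-loop world-model predictions, reset to the real state every `horizon` frames.

    real_states[t] is the simulated ragdoll state at frame t; the returned list
    holds the model's predictions for frames 1..len(actions).
    """
    predictions = []
    x = real_states[0]
    for t, u in enumerate(actions):
        if t % horizon == 0:
            x = real_states[t]  # reset to the real ragdoll pose
        x = model_step(x, u)    # step the model from its current (possibly predicted) state
        predictions.append(x)
    return predictions


# Toy usage: the real system moves by u each frame, the model by 1.1 * u.
actions = [1.0] * 6
real_states = [float(t) for t in range(7)]
preds = rollout_with_resets(lambda x, u: x + 1.1 * u, real_states, actions, horizon=3)
errors = [abs(p - r) for p, r in zip(preds, real_states[1:])]
```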

What we see is that the world model can learn some complex motions which include collisions with the floor, fast rotations of the character reference frame, and accurate hand and foot tracking. Overall, we were surprised by the quality of the world model predictions. Previous research on world models has used embedded representations to reduce the complexity of the world and enforce prediction stability, but our results show that with the right data representation and training setup, this wasn’t necessary.

World models are an exciting area of research. They benefit from faster training times, fewer environment samples, and stable policy training. World model-based learning moves us closer to the possibility of having physical character controllers that are quick to train and more reliable to deploy in-game – two of the major factors that limit their adoption in the games industry.

In terms of what’s next for physically-based character animation and world models, there are many rich veins of research to be explored. For example, transferring to new tasks might be easier with world model-based approaches than reinforcement learning because world models share many of the benefits of supervised learning, such as impressive generalization. We’ve shown some of the ways world models can be applied to physical character animation, but this approach can be used in place of reinforcement learning for much of the recent research in this field. For example, our early experiments combining world models and Adversarial Motion Priors (AMP) have shown promising results.

Final Remarks

Physics is slowly realizing its potential as a powerful tool for helping character animators. Its ability to add depth to gameplay by producing realistic and emergent character responses can’t be ignored. The work we have presented here starts to address two of the major difficulties with achieving a future of fully physics-based character controllers: iteration time and motion realism. Previous reinforcement learning-based research suffered from days to weeks of training time and suboptimal motion tracking. By reframing motion imitation as a supervised learning problem, our research shows that with a computational budget of only a few hours, it is possible to train high-quality, realistic character controllers for very complex motions.

Additional Resources

For more details about the research discussed in this post, please refer to our SIGGRAPH Asia 2021 paper SuperTrack: Motion Tracking for Physically Simulated Characters using Supervised Learning. Further information and results can also be found in the accompanying supplementary video below.