On September 4th, 2018, we deployed Operation Grim Sky to the live servers. The Grim Sky update came with improvements to the overall matchmaking flow aimed at making the initial game server selection process much smoother. Upon deployment, we saw more matchmaking errors than we had during the Test Server phase. Players, primarily in North America, were unable to use the matchmaking system to join matches.

Below you will find a summary of what happened, and the steps we took to fix it.

The Matchmaking Process

Selecting the “Multiplayer” button for any of the online game modes initiates a two-step process.

First, the game contacts our matchmaking services and searches for the best possible match. Depending on the game mode, your rank, and the current player population, this step typically takes 10-60 seconds. The degradation did not affect this step of the process.

Second, the client attempts to connect to the game server. This is the point at which the degradation occurred.

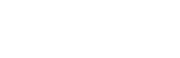

Connections to our game servers are an exchange of UDP (User Datagram Protocol) messages between the client and the server. From a high-level perspective, they look like this:

![[2018-09-14] MM degrad picture](https://ubistatic19-a.ubisoft.com/resource/en-ca/game/news/rainbow6/siege-v3/mm_degrad_335043.png)

The client negotiates a communication channel with the server, waits for a frame (~16ms) and then starts sending information (Hello) on that channel.

The Discovery

Through the investigation of this issue, we learned that there was a pre-existing bug on the server end of the connection: if the 4th message (Hello) reached the game server before the 3rd (Ack) within a very short time frame, the connection ended up in an error state from which it could not recover. This guarantees a 6-0x00001000 error (connection timed out).
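To make the failure mode concrete, here is a minimal sketch of a server-side handshake state machine. It is a toy model, not the actual game server code: the class names, the state names, and the `final_state` helper are all hypothetical, and only the message names Ack and Hello come from the description above. `StrictConnection` mirrors the described bug, where an early Hello is fatal. `TolerantConnection` shows one possible way to tolerate reordering by remembering an early Hello; the fix that was actually shipped may work differently.

```python
from enum import Enum, auto


class State(Enum):
    AWAITING_ACK = auto()    # channel negotiated, 3rd message (Ack) not yet seen
    AWAITING_HELLO = auto()  # Ack received, waiting for the 4th message (Hello)
    CONNECTED = auto()
    ERROR = auto()           # unrecoverable; the client would eventually time out


class StrictConnection:
    """Hypothetical model of the bug: a Hello that arrives before the Ack is fatal."""

    def __init__(self) -> None:
        self.state = State.AWAITING_ACK

    def receive(self, message: str) -> None:
        if self.state is State.AWAITING_ACK:
            # Anything other than Ack at this point, including an early Hello,
            # moves the connection into the error state.
            self.state = State.AWAITING_HELLO if message == "Ack" else State.ERROR
        elif self.state is State.AWAITING_HELLO and message == "Hello":
            self.state = State.CONNECTED
        # CONNECTED and ERROR ignore further handshake messages (no recovery).


class TolerantConnection:
    """One possible fix (an assumption): remember an early Hello until the Ack arrives."""

    def __init__(self) -> None:
        self.state = State.AWAITING_ACK
        self.hello_pending = False

    def receive(self, message: str) -> None:
        if self.state is State.AWAITING_ACK:
            if message == "Hello":
                self.hello_pending = True  # hold it instead of failing
            elif message == "Ack":
                # If the Hello already arrived, the handshake is complete.
                self.state = (
                    State.CONNECTED if self.hello_pending else State.AWAITING_HELLO
                )
        elif self.state is State.AWAITING_HELLO and message == "Hello":
            self.state = State.CONNECTED


def final_state(connection, messages):
    """Feed messages to a connection in arrival order and return its final state."""
    for message in messages:
        connection.receive(message)
    return connection.state


if __name__ == "__main__":
    in_order = ["Ack", "Hello"]
    reordered = ["Hello", "Ack"]
    print(final_state(StrictConnection(), in_order))
    print(final_state(StrictConnection(), reordered))
    print(final_state(TolerantConnection(), reordered))
```

In this sketch the strict version connects only when the two messages arrive in order, while the tolerant version connects in either order.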

In most cases, messages sent in a specific order are received in that same order. Reordering is rare enough that the error itself is not frequent, but it can also happen in legitimate cases. For instance, it can occur with a misconfigured firewall on the player’s side, which makes it hard to track properly. Put another way, a small number of these errors is expected and nothing serious, but a large number is abnormal. Because the Test Server had a much smaller sample size, we were not able to immediately recognize the extent of the issue during the Test Server phase.

The Evolution of the Degradation

One of the changes we made in Operation Grim Sky is an optimization where the client no longer waits a frame and instead sends the 3rd and 4th messages immediately, still in sequence, within a very short time frame. On most Internet Service Providers (ISPs), this has no noticeable effect. On some ISPs, however, the two messages were so closely timed that they were reordered in most cases, making the issue a nearly guaranteed occurrence for players using those providers. This resulted from the low latency of connections through those ISPs. In essence, the lower the latency of a player’s connection, the higher the likelihood of encountering this error.
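The effect of removing the wait can be illustrated with a small simulation. This is a toy model under stated assumptions, not a measurement of any real network: each message gets an independent random one-way delay drawn uniformly from `[0, jitter_ms]`, and the function name `reorder_rate`, its parameters, and the 2 ms jitter value are hypothetical. The model only shows why the gap between sends matters. It does not attempt to reproduce the ISP-specific behaviour or the latency relationship described above.

```python
import random


def reorder_rate(send_gap_ms: float, jitter_ms: float,
                 trials: int = 10_000, seed: int = 1) -> float:
    """Return the fraction of trials in which Hello arrives before Ack.

    Ack is sent at time 0 and Hello at time send_gap_ms. Each message is
    delayed by an independent uniform random amount in [0, jitter_ms].
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    reordered = 0
    for _ in range(trials):
        ack_arrival = rng.uniform(0.0, jitter_ms)
        hello_arrival = send_gap_ms + rng.uniform(0.0, jitter_ms)
        if hello_arrival < ack_arrival:
            reordered += 1
    return reordered / trials


if __name__ == "__main__":
    # 16 ms approximates the one-frame wait; 0 ms models back-to-back sends.
    for gap in (16.0, 1.0, 0.0):
        print(f"gap={gap} ms -> reorder rate {reorder_rate(gap, jitter_ms=2.0):.3f}")
```

In this model, a one-frame gap that is larger than the delay variation makes reordering impossible, while back-to-back sends are reordered in roughly half of the trials.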

Additionally, if at least one player in a squad is unable to join a match, the game server connection phase fails for everyone (2-0x0000D012). This was originally designed to ensure the integrity of squads while matchmaking. However, with so many players affected by the initial error, it triggered a cascade effect on those who joined an impacted player’s squad.
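The cascade can be quantified with a short calculation. The sketch below assumes that each squad member fails to connect independently with the same probability, which is a simplification, and the 10% figure in the usage example is purely illustrative rather than a measured rate. The function name `squad_failure_probability` is hypothetical.

```python
def squad_failure_probability(p_player_fails: float, squad_size: int) -> float:
    """Probability that a squad fails to join when any single failure fails everyone.

    Assumes each member fails independently with probability p_player_fails,
    so the squad succeeds only if every member succeeds.
    """
    if not 0.0 <= p_player_fails <= 1.0:
        raise ValueError("p_player_fails must be between 0 and 1")
    if squad_size < 1:
        raise ValueError("squad_size must be at least 1")
    return 1.0 - (1.0 - p_player_fails) ** squad_size


if __name__ == "__main__":
    # Illustrative numbers only: a 10% individual failure rate, squads of 1 to 5.
    for size in range(1, 6):
        print(size, round(squad_failure_probability(0.10, size), 3))
```

Under these assumptions, a 10% individual failure rate turns into a failure rate of about 41% for a five-player squad, which is why an error affecting some players was felt by many more.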

Once we identified and confirmed the problem, we were able to fix the game server so that this issue will no longer occur.

Timeline to a Fix

We spent the first couple of days trying to reproduce the issue based on our understanding of the situation at the time. Unfortunately, these efforts were not successful. No matter how many different simulations of latency, jitter, and packet loss we experimented with, we could not reproduce the issue reliably enough to be confident that we had found the actual root of the problem. Spending time and resources trying to “fix” something that is not the actual cause is detrimental to both us and the players, as it only prolongs the problem.

Luckily, many players reached out to us and offered their help. We worked closely with a few players who had an extremely high occurrence rate, and through their generosity and cooperation, we were able to make greater strides in our investigation. By the second debug session, we were confident that we had discovered the source of the problem and could begin working towards an actual fix. By Sunday evening, we had finalized a fix, and after running a final debug session with our volunteer test case players on Monday to ensure the issue was no longer present, we felt that we had found the solution. The fix was then immediately scheduled for deployment the following business day, Tuesday.

Next Steps

One of the main difficulties we faced while attempting to ascertain the cause of the issue was that the change that triggered it was released at the same time as a major Season update. This made it impossible to roll back, as doing so would have meant un-releasing the entire Season.

For the future, we have begun preparing improvements to our release process that will allow us to deploy new content, particularly new Seasonal content, separately from low-level, non-player-facing changes. In the event that similar problems happen again, we will then have the ability to roll back if necessary.

We want to take this opportunity to once again express our gratitude to those players who offered their time to help us solve the issue for the whole Rainbow Six community. You are awesome!

Additionally, we are continuing to work on the crashes that players are encountering across all platforms. We have fixes for the majority of these coming with Patch 3.1.